As solar energy transitions from a niche industry to a cornerstone of the global energy mix, the margin for error in PV project development has become increasingly narrow. Projects are designed to operate close to technical and financial limits, meaning that inaccurate inputs or weak due diligence can have long-term consequences for both performance and profitability.

One of the most critical inputs in PV project planning is solar resource and meteorological data. Choosing the right dataset is far from trivial: the quality of the data and the expertise behind it influence every stage of the project. This choice directly determines whether a project meets its expected returns, passes due diligence and operates reliably.

When evaluating potential partners, whether data providers or consultants, it is essential to apply rigorous criteria. This can be difficult without a solid understanding of geoscience and engineering.

Demand physics-based, validated time series data

Many datasets still used in the industry are generated synthetically from multiple aggregated data sources. Such datasets may appear plausible but cannot be validated against on-site measurements or traced back to physical reality.

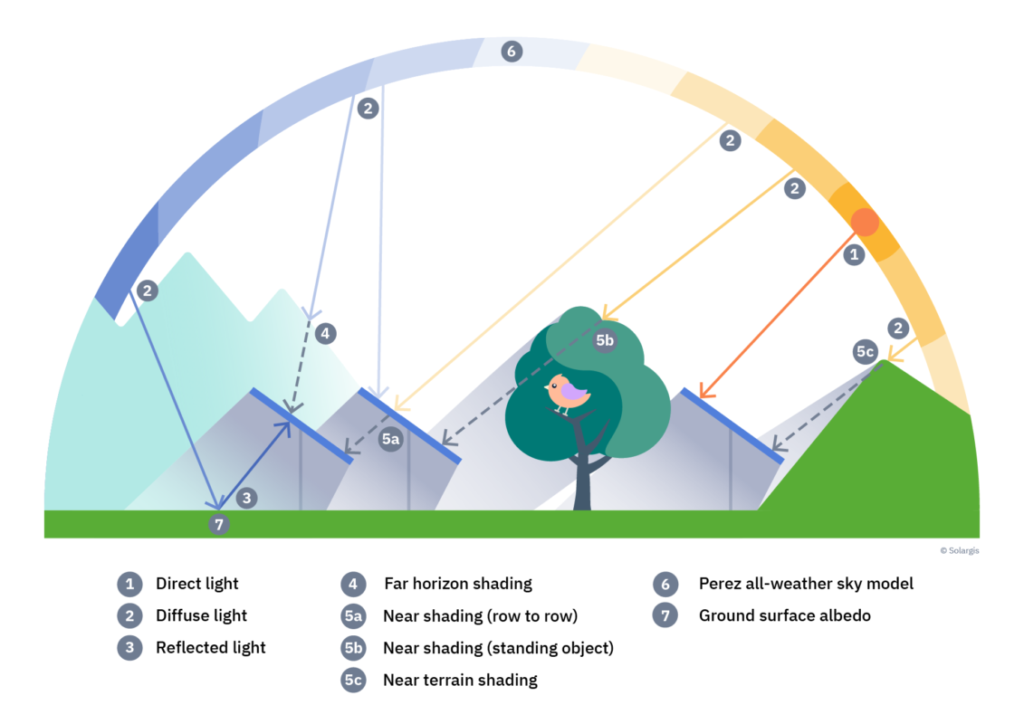

Reliable datasets must be rooted in physical modelling, derived from global satellite and atmospheric data, and processed into high-resolution time series. Modern datasets should be validated against high-quality ground measurements available at sub-hourly resolution. The best physics-based datasets capture the full dynamics of local climate conditions.

Datasets generated purely through empirical correlations or synthetic ‘patchwork’ methods are not fit for purpose. They cannot be independently validated against ground measurements and often contain hidden inconsistencies. Aggregated data based on subjective or non-transparent methodologies should be treated as a red flag.

Insist on high temporal resolution data

Short-term fluctuations in solar irradiance must be considered when sizing equipment, scheduling energy delivery and evaluating performance. Datasets aggregated into monthly or hourly averages obscure short-term variability and extremes. Designs based on such data can lead to underperformance, inverter shutdowns or even equipment damage.

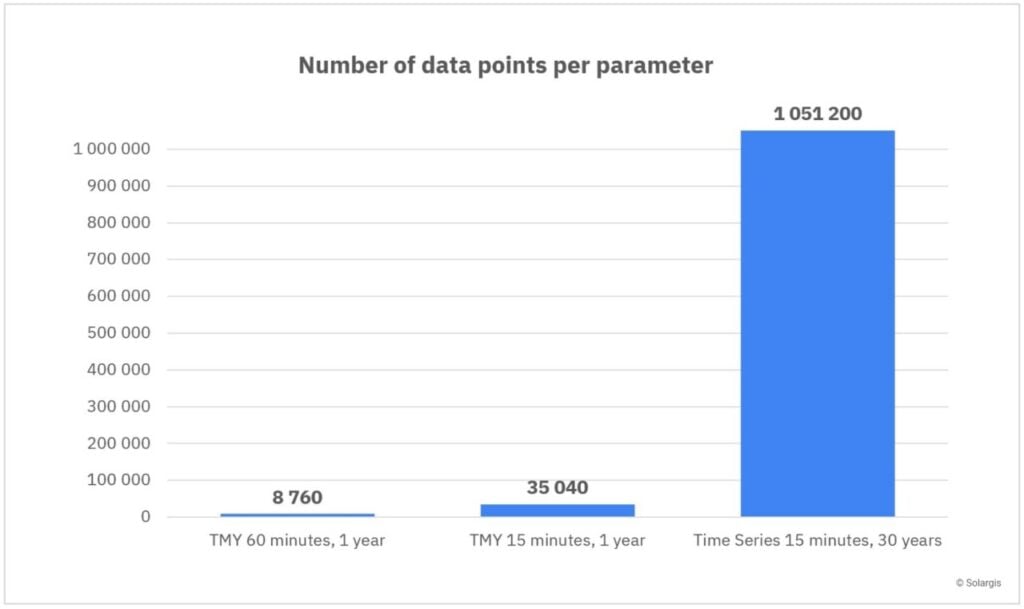

Two datasets may show similar annual yields but differ significantly at the sub-hourly level. These differences directly impact system design, inverter sizing, energy storage performance and the accuracy of operational forecasts.

High-resolution (1–15 minute) time series data reveal whether performance issues stem from specific weather conditions, extreme events, or suboptimal design. Just as an ECG offers deeper insight into heart performance than a simple pulse check, granular data is essential to identify the true strengths and weaknesses of a project design.

My advice is to obtain 1- or 15-minute resolution data for both PV design optimisation and energy modelling. Modern cloud computing makes it practical to process such large data volumes quickly.
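A minimal Python sketch makes the averaging effect concrete. It uses a hypothetical one-hour series of 1-minute DC power under broken clouds, feeding an inverter with an assumed 100 kW AC limit. The alternating 130 kW and 70 kW values, the function names and the simple "everything above the limit is clipped" loss model are illustrative assumptions. They are not data from a real plant or any provider's method. The hour contains the same energy in both representations, yet only the 1-minute series reveals the clipping.

```python
# Minimal sketch: how hourly averaging hides inverter clipping.
# All values are hypothetical and chosen only to illustrate the effect.

INVERTER_LIMIT_KW = 100.0  # assumed AC rating of the inverter


def synthetic_minute_power(minutes: int = 60) -> list[float]:
    """Hypothetical 1-minute DC power (kW) under broken clouds:
    cloud-enhancement peaks alternate with cloud-shadow dips."""
    return [130.0 if m % 2 == 0 else 70.0 for m in range(minutes)]


def energy_kwh(power_kw: list[float], step_minutes: float) -> float:
    """Total energy (kWh) represented by a power series at a fixed time step."""
    return sum(power_kw) * step_minutes / 60.0


def clipping_loss_kwh(power_kw: list[float], step_minutes: float, limit_kw: float) -> float:
    """Energy (kWh) above the inverter limit, i.e. the part that would be clipped."""
    excess = [max(p - limit_kw, 0.0) for p in power_kw]
    return energy_kwh(excess, step_minutes)


minute_series = synthetic_minute_power()
hourly_series = [sum(minute_series) / len(minute_series)]  # the same hour as one average

for label, series, step in [("1-minute", minute_series, 1.0), ("hourly", hourly_series, 60.0)]:
    print(f"{label:>8}: energy {energy_kwh(series, step):.1f} kWh, "
          f"clipped {clipping_loss_kwh(series, step, INVERTER_LIMIT_KW):.1f} kWh")
```

With these assumed numbers, both series contain 100 kWh. The 1-minute series shows 15 kWh of clipping in the hour, while the hourly average shows none.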

Use the longest possible historical time series to capture interannual variability and trends

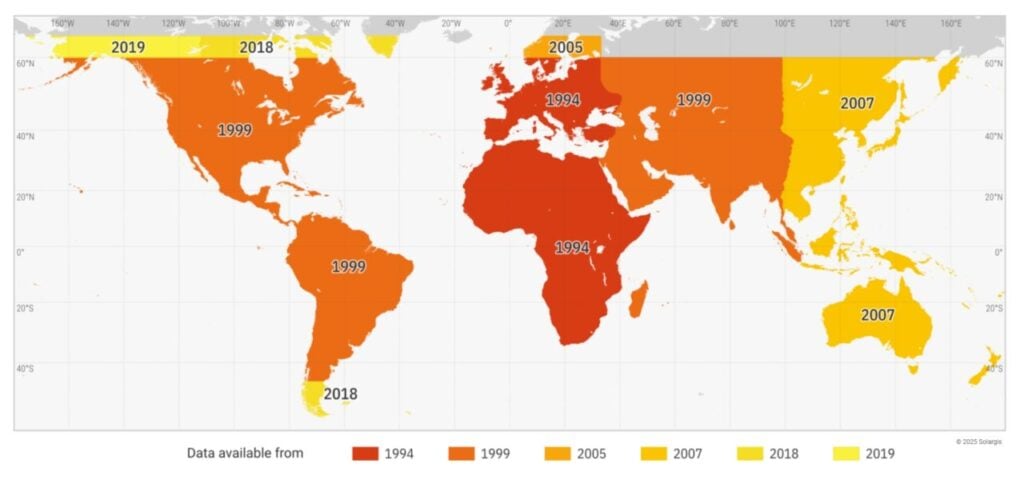

To accurately represent year-on-year variability and climate trends at a project site, a time series should ideally span 20–30 years or more.

Short data records (such as ten years) fail to capture the full range of variability and long-term trends. Because of multi-year weather cycles and changes in human activity, recent weather data alone does not represent the future. As extreme weather events become more common, a robust dataset should combine a long-term view with high granularity. That way it captures both short-term extremes and long-term trends such as rising temperatures, changing aerosol levels or the increasing frequency of extreme weather.
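The effect of record length on a resource estimate can be sketched in a few lines of Python. The 30 annual global horizontal irradiation (GHI) values below are hypothetical (kWh/m²). They are constructed so that the most recent ten years happen to be unusually stable. The sketch estimates a P90 from interannual variability alone, assuming normally distributed annual totals. A real bankability assessment would also combine model, measurement and other uncertainties. The function name `p_exceedance` is illustrative.

```python
from statistics import NormalDist, mean, stdev

# Hypothetical annual GHI totals (kWh/m²), oldest first. Not real site data.
annual_ghi = [
    1850, 1720, 1905, 1780, 1690, 1880, 1760, 1930, 1700, 1820,
    1870, 1740, 1910, 1795, 1710, 1860, 1750, 1890, 1725, 1840,
    1800, 1815, 1790, 1805, 1810, 1795, 1800, 1820, 1785, 1805,
]


def p_exceedance(values: list[float], probability: float = 0.90) -> float:
    """Annual value exceeded with the given probability, assuming normally
    distributed annual totals (interannual variability only)."""
    z = NormalDist().inv_cdf(1.0 - probability)  # about -1.28 for P90
    return mean(values) + z * stdev(values)


long_record = annual_ghi          # all 30 years
short_record = annual_ghi[-10:]   # only the most recent 10 years

for label, record in [("30-year", long_record), ("10-year", short_record)]:
    cv = stdev(record) / mean(record) * 100  # interannual coefficient of variation
    print(f"{label}: mean {mean(record):.0f}, interannual CV {cv:.1f}%, "
          f"P90 {p_exceedance(record):.0f} kWh/m²")
```

With these assumed values, the 10-year record suggests a much narrower spread and a more optimistic P90 than the full 30-year history supports.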

Over the last two to three decades, we have witnessed a dramatic evolution in human activities that affect atmospheric pollution. Industrialisation, land-use changes, shifting energy mixes, more stringent industrial policies, increased electricity use and the growth of electric vehicles are all contributing factors. These changes in turn alter solar radiation patterns.

Only a long history of data can help us better understand the future. Long-term data serves as a stable reference for monitoring PV plant performance throughout its operational lifetime. While some argue that long-term data is less relevant due to climate change, the opposite is true: it is precisely these long-term records that allow us to detect, quantify, and understand such changes.
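A long record is what makes a trend estimate meaningful. The minimal sketch below fits an ordinary least-squares line to hypothetical annual irradiation values. The series contains an assumed brightening of 1.5 kWh/m² per year plus an alternating ±8 kWh/m² year-to-year swing. It then compares the full 30-year fit with a fit to the last ten years only. The data, the linear-trend model and the function name `ols_slope` are illustrative assumptions. A real trend analysis would typically also assess statistical significance.

```python
# Minimal sketch: estimating a linear trend from annual irradiation totals.
# Hypothetical data: assumed brightening of 1.5 kWh/m² per year plus a ±8 kWh/m²
# alternating year-to-year swing. Not real site data.

years = list(range(1995, 2025))  # 30 hypothetical years
annual_ghi = [1780 + 1.5 * i + (8 if i % 2 == 0 else -8) for i in range(len(years))]


def ols_slope(x: list[float], y: list[float]) -> float:
    """Ordinary least-squares slope of y against x."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    return sxy / sxx


full_trend = ols_slope(years, annual_ghi) * 10                 # kWh/m² per decade
recent_trend = ols_slope(years[-10:], annual_ghi[-10:]) * 10   # last 10 years only

print(f"Trend from 30 years: {full_trend:+.1f} kWh/m² per decade")
print(f"Trend from 10 years: {recent_trend:+.1f} kWh/m² per decade")
```

With these assumed values, the 30-year fit recovers roughly 14.5 kWh/m² per decade, close to the built-in 15. The 10-year fit gives only about 10, because the year-to-year swing carries far more weight in a short window.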

Ensure the data can be reused for future performance validation

Bankable projects are not static. Modern satellite-based solar models provide not only a long history of time series data but also updates in near real time. Such models generate a consistent stream of data that can be used in project development and for regular performance monitoring over the plant's entire lifetime.

After a PV power plant is commissioned, operators should be able to compare new ground measurements with the same data source used during development to detect anomalies and identify the root causes of any underperformance.

This is only possible when datasets are transparent, stable and based on sound physical principles. The outputs of scientifically rigorous models can be verified against on-site measurements at any time. In contrast, if the dataset is a one-off synthetic product or derived from arbitrary adjustments, future verification becomes impossible. The inability to ‘close the loop’ between design assumptions and operational reality exposes projects to unquantifiable risk.
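Closing the loop can start with simple statistics. The sketch below compares a week of hypothetical on-site daily irradiation measurements with the same satellite-based source used during development. It reports the relative mean bias and RMSE. Both are defined as modelled minus measured and normalised by the measured mean, a common convention in solar resource validation. It also flags days that deviate by more than an assumed 8% tolerance. The values, the threshold and the function names are assumptions for illustration. A flagged day is a prompt for root-cause analysis, not a diagnosis.

```python
from math import sqrt

# Hypothetical daily global horizontal irradiation (kWh/m²) for one week.
modelled = [6.1, 5.8, 6.3, 4.2, 6.0, 5.9, 6.2]  # satellite-based source used in development
measured = [6.0, 5.9, 6.2, 4.3, 5.1, 5.8, 6.1]  # on-site pyranometer after commissioning


def validation_stats(measured: list[float], modelled: list[float]) -> tuple[float, float]:
    """Relative mean bias and RMSE (%), defined as modelled minus measured,
    normalised by the mean of the measurements."""
    n = len(measured)
    diffs = [mo - me for me, mo in zip(measured, modelled)]
    mean_measured = sum(measured) / n
    rel_bias = sum(diffs) / n / mean_measured * 100
    rel_rmse = sqrt(sum(d * d for d in diffs) / n) / mean_measured * 100
    return rel_bias, rel_rmse


def flag_anomalies(measured: list[float], modelled: list[float], tolerance: float = 0.08) -> list[int]:
    """Indices of days where the measurement deviates from the model by more
    than the (assumed) relative tolerance."""
    return [i for i, (me, mo) in enumerate(zip(measured, modelled))
            if abs(me - mo) / mo > tolerance]


bias, rmse = validation_stats(measured, modelled)
print(f"Relative bias {bias:+.1f}%, relative RMSE {rmse:.1f}%")
print("Days to investigate:", flag_anomalies(measured, modelled))
```

Here only day index 4 is flagged, where the measurement falls about 15% below the model. On every other day the two sources agree to within a few per cent.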

Evaluate the consultant’s expertise and track record

A dataset is only as good as the people and processes behind it. Reliable results require experts who understand both the physics and the limitations of data derived from models and measurements. A credible consultant or data provider should demonstrate deep expertise in solar and meteorological data methodologies and in how these influence energy modelling. They should also follow industry trends and stay up to date with the latest technology.

If someone tells you that 12 long-term monthly averages or hourly typical meteorological year (TMY) datasets are “good enough,” be cautious. That may have been acceptable years ago, but the industry has evolved. With increased computational power and access to high-quality, large-volume data, the bar is now higher. Just as the electricity market has evolved, so too have the tools and methods we use. Those who rely on outdated principles are unlikely to keep up.

It’s also important to verify that your provider has a long-standing industry presence, a proven track record of successful projects, and the capability to support projects beyond commissioning. Experienced experts continuously follow innovation in satellite-based solar modelling and high-performance computing for PV.

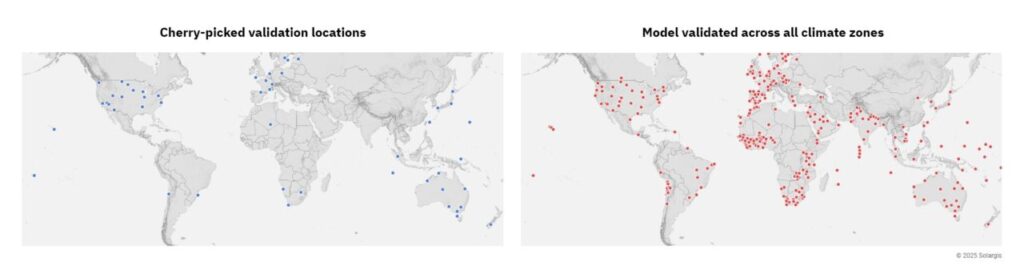

Avoid ‘cherry-picked’ results

Some consultants present multiple datasets or subjectively adjust parameters to produce a desired outcome. Such practices are unscientific and compromise project integrity.

A robust approach produces a single, traceable result that reflects the best available science, not a result that most conveniently aligns with short-term business objectives.

Investors should be wary of reports that rely on vague justifications or unexplained tuning factors. Every assumption should be defensible, every dataset auditable and every result reproducible.

It’s always useful to ask probing questions, such as:

- What is the typical uncertainty of this dataset in my region?

- How is the data validated against high-resolution ground measurements?

- Will this dataset pass technical due diligence when the project is sold or refinanced?

Recognise that data quality is a small cost with a large impact

The cost of acquiring high-quality solar and meteorological data is negligible compared to the total capital expenditure of a PV project. Cutting corners at this stage by choosing a cheaper dataset or consultant can lead to underperformance, disputes with financiers or costly redesigns down the line.

Much like constructing a building on weak foundations, starting a PV project with unreliable data creates systemic risks that are expensive or impossible to fix later. Doing things properly from the start is not only prudent but ultimately far more economical.

Cutting corners in PV development is tempting, but dubious data leads to poor design decisions, equipment damage, unreliable financial models and underperforming assets that burden owners for decades.

Scientifically sound data and expert consulting are strategic assets that pay off many times over. A credible partner should combine deep expertise, active scientific engagement (or at least up-to-date knowledge of the latest trends and capabilities) and a proven track record.

Work with data providers and consultants who are in it for the long haul and who build projects on validated, physics-based models. Such projects are more likely to perform as expected, pass lender due diligence and deliver reliable returns.