The integrity of a PV project largely depends on the quality of the solar, meteorological and environmental data used. Whether modeling PV performance, optimising system design or assessing financial risk, the foundation of decision-making rests on one crucial question: Are we using the best available science to characterise real-world solar resource conditions, or are we continuing to rely on legacy approaches and black-box assumptions?

As much as it pains me to admit it, many players in the solar energy industry still work with empirical, low-quality solar datasets built on various simplifications, often with crucial details left opaque or unvalidated. Energy modelling relies on a patchwork of solar datasets, some so outdated that they belong in a museum.

Worse still, some in the industry cherry-pick the data that best supports their business case and then invent ‘expert’ justifications to defend those choices.

However, as solar energy becomes a mainstream source in markets that demand accuracy, transparency and accountability, this approach is not only wrong but dangerous. Solar developers must move decisively toward scientifically rigorous solar model data if the industry is to deliver on its promise of sustainability and resilience.

Why empirical solar modeling and ad-hoc approaches fall short

Simple empirical models, by definition, depend on statistical correlations, not physical causality. They may work in the familiar, simple conditions for which they were originally developed, but they break down when applied to new geographies. Some consultants routinely mix and match solar data from different sources and convert the result into synthetically generated hourly data.

These approaches are subjective and result in unrealistic, misleading data products that fail in complex environments with high solar variability. This is not physics-based modeling; it’s guesswork.

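This practice is neither traceable nor reproducible, and those are key attributes of any scientific approach. When physical relationships are violated, such as the fundamental balance between global, direct and diffuse irradiance (global horizontal irradiance must equal direct normal irradiance multiplied by the cosine of the solar zenith angle, plus diffuse horizontal irradiance), the result is like a three-legged stool with mismatched legs: it somehow stands, but not straight, and it is not safe to sit on.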
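
The balance between the three components can be written as GHI = DNI × cos(θz) + DHI, where θz is the solar zenith angle, and testing every time step against it is a quick way to expose a mixed-source dataset. The Python sketch below is illustrative only: the function names are hypothetical, and the tolerances (8% below 75° zenith, 15% above, with no judgement when the expected sum is under 50 W/m²) are assumptions loosely modelled on common quality-control practice, not thresholds taken from this article.

```python
import math


def closure_residual(ghi, dni, dhi, zenith_deg):
    """GHI minus the value implied by its components, in W/m²."""
    return ghi - (dni * math.cos(math.radians(zenith_deg)) + dhi)


def closure_ok(ghi, dni, dhi, zenith_deg, min_sum=50.0):
    """Return True if GHI, DNI and DHI are mutually consistent.

    Tolerances are illustrative assumptions: 8% for zenith < 75 degrees,
    15% otherwise. Time steps with very little light are not judged.
    """
    expected = dni * math.cos(math.radians(zenith_deg)) + dhi
    if expected < min_sum:
        return True  # too dark to judge reliably; not flagged
    tolerance = 0.08 if zenith_deg < 75.0 else 0.15
    return abs(ghi / expected - 1.0) <= tolerance


# Consistent triplet: 600 * cos(30°) + 100 is about 619.6 W/m².
print(closure_ok(620.0, 600.0, 100.0, 30.0))  # True
# "Patchwork" triplet: GHI from one source, DNI and DHI from another.
print(closure_ok(520.0, 600.0, 100.0, 30.0))  # False
```

A stool with matched legs passes this check at every time step; a dataset stitched together from unrelated sources typically fails it repeatedly.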

The case for physics-based solar modeling

Physics does not bend to business cases – and that is its greatest strength. It reveals the truth.

When solar irradiance is modeled using scientific principles – following the radiative laws in the atmosphere; accounting for attenuation by aerosols and water vapor; and incorporating cloud dynamics and high-resolution terrain data – the results are not only physically grounded but also reproducible and independent of any business case.

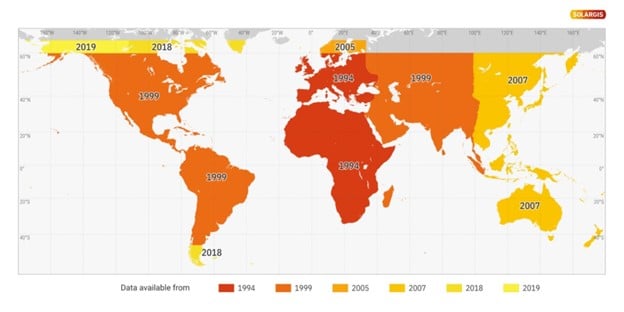

Modern solar models are built on rigorous scientific equations and draw on data from meteorological satellites, global weather models and other geospatial datasets. They are designed to perform accurately across all climate zones. Machine learning has emerged as the next step in solar modelling, particularly where physical models and globally available input data reach their limits.

Rigorous physics-based models need no further manipulation to produce acceptable results. Instead, they can be validated against high-quality ground measurements and, where local conditions call for it, refined through site-specific model adaptation rather than speculative tuning. Financial stakeholders increasingly demand this level of scientific rigor, a welcome development that strengthens the integrity of the solar industry.
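
Validation against ground measurements usually starts with a few error statistics, and the simplest form of site adaptation is a linear correction fitted over a period when model output and station data overlap. The sketch below shows both in plain Python. It is an illustration under stated assumptions: the function names and toy numbers are hypothetical, and linear regression is only one of several site-adaptation techniques in use, not necessarily the one any particular data provider applies.

```python
from statistics import fmean


def validation_stats(model, measured):
    """Mean bias error (MBE) and RMSE of model vs. ground data, absolute and relative."""
    if not model or len(model) != len(measured):
        raise ValueError("need two non-empty series of equal length")
    errors = [m - o for m, o in zip(model, measured)]
    mbe = fmean(errors)
    rmse = fmean(e * e for e in errors) ** 0.5
    mean_obs = fmean(measured)
    return {"mbe": mbe, "rmse": rmse, "rel_mbe": mbe / mean_obs, "rel_rmse": rmse / mean_obs}


def fit_linear_site_adaptation(model, measured):
    """Ordinary least-squares fit of measured ≈ a * model + b."""
    mx, my = fmean(model), fmean(measured)
    sxx = sum((x - mx) ** 2 for x in model)
    sxy = sum((x - mx) * (y - my) for x, y in zip(model, measured))
    a = sxy / sxx
    return a, my - a * mx


# Toy hourly GHI (W/m²): the model reads about 9% high at this hypothetical site.
model = [500.0, 600.0, 700.0, 800.0]
measured = [460.0, 552.0, 644.0, 736.0]

print(validation_stats(model, measured))  # mbe is 52.0 W/m²
a, b = fit_linear_site_adaptation(model, measured)
adapted = [a * x + b for x in model]
print(validation_stats(adapted, measured))  # bias close to zero after adaptation
```

Fitting and scoring on the same period, as in this toy example, will always look perfect; a real site adaptation should be checked on data held out of the fit.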

Lenders and investors are no longer satisfied with generic models. They now require objective, physics-based simulations that are transparent and can be independently validated. Many even request formal ‘reliance letters’ as evidence that the data and modeling practices behind a project’s financial case are science-driven and free from commercial bias.

This shift is helping to eliminate providers that offer unverified or inconsistent data, improving overall credibility across the market.

From Linke Turbidity to new-generation solar models

About 15 years ago, the Linke Turbidity Factor was commonly used to estimate how aerosols and water vapor in the atmosphere reduce the amount of sunlight reaching the ground. This atmospheric parameter gives a rough idea of how ‘polluted’ the atmosphere is by expressing its dimming of direct sunlight as the number of clean, dry atmospheres that would produce the same attenuation.

In practice, however, accurate values were hard to obtain: clear-sky measurements were rare, and the data were often limited to monthly averages. Linke turbidity datasets were also geographically sparse and, because of their coarse resolution, could not account for local conditions, which made models based on them too simplistic for accurate solar resource assessment.
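
To make the definition concrete: in the ESRA clear-sky model, clear-sky direct normal irradiance is written as DNI = I0 · ε · exp(-0.8662 · TL · m · δR(m)), where m is the relative air mass, ε the Sun-Earth distance correction and δR(m) the Rayleigh optical thickness of a clean, dry atmosphere. Inverting the same relation gives TL from a single cloud-free DNI reading, which is why the factor depended so heavily on rare clear-sky measurements. The Python sketch below assumes sea-level pressure, no refraction correction, a solar constant of 1361 W/m², and the Kasten and Young air-mass and ESRA Rayleigh coefficients as commonly cited; verify them against the original publications before relying on any number it produces. All function names are hypothetical.

```python
import math
from collections import defaultdict
from statistics import fmean

I0 = 1361.0  # solar constant in W/m² (assumed value)


def relative_air_mass(elevation_deg):
    """Kasten and Young (1989) relative optical air mass, sea level, no refraction correction."""
    if elevation_deg <= 0.0:
        raise ValueError("sun must be above the horizon")
    return 1.0 / (math.sin(math.radians(elevation_deg))
                  + 0.50572 * (elevation_deg + 6.07995) ** -1.6364)


def rayleigh_optical_thickness(m):
    """Integral Rayleigh optical thickness of a clean, dry atmosphere (ESRA parameterisation)."""
    if m <= 20.0:
        return 1.0 / (6.6296 + 1.7513 * m - 0.1202 * m**2 + 0.0065 * m**3 - 0.00013 * m**4)
    return 1.0 / (10.4 + 0.718 * m)


def clear_sky_dni(linke_tl, elevation_deg, ecc=1.0):
    """Clear-sky beam normal irradiance for a given Linke turbidity factor TL."""
    m = relative_air_mass(elevation_deg)
    return I0 * ecc * math.exp(-0.8662 * linke_tl * m * rayleigh_optical_thickness(m))


def linke_from_dni(dni, elevation_deg, ecc=1.0):
    """Invert the same relation: TL implied by one cloud-free DNI measurement."""
    m = relative_air_mass(elevation_deg)
    return math.log(I0 * ecc / dni) / (0.8662 * m * rayleigh_optical_thickness(m))


def monthly_linke(samples):
    """Average TL per month from (month, dni, solar_elevation_deg) clear-sky samples."""
    by_month = defaultdict(list)
    for month, dni, elevation in samples:
        by_month[month].append(linke_from_dni(dni, elevation))
    return {month: fmean(values) for month, values in sorted(by_month.items())}


# A higher TL ("more polluted") gives less direct sunlight at the same sun height.
print(round(clear_sky_dni(3.0, 60.0)), round(clear_sky_dni(6.0, 60.0)))
```

With only a handful of genuinely cloud-free samples per month, each value returned by `monthly_linke` rests on very little data, and a single monthly number cannot represent local, day-to-day changes in haze or humidity.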

Modern physics-based models go much further. The new generation of solar models simulates the transmission of solar radiation through the atmosphere, including complex cloud interactions, using detailed atmospheric and cloud data sourced from global weather models and satellite observation systems.
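
At the core of such a simulation is Beer-Lambert-Bouguer attenuation: each atmospheric constituent contributes an optical depth τ, and the direct beam is reduced by a factor exp(-m · Στ), with m the air mass. The sketch below applies the law to broadband optical depths, which is a strong simplification (operational models work band by band and treat gas absorption more carefully). The optical-depth values in the usage example are hypothetical, chosen only to show how an aerosol event such as a wildfire-smoke plume cuts DNI.

```python
import math


def beam_transmittance(air_mass, optical_depths):
    """Beer-Lambert-Bouguer transmittance for the sum of broadband optical depths."""
    return math.exp(-air_mass * sum(optical_depths.values()))


def dni_from_constituents(extraterrestrial, zenith_deg, optical_depths):
    """Direct normal irradiance after attenuation by each atmospheric constituent."""
    if zenith_deg >= 90.0:
        raise ValueError("sun must be above the horizon")
    # Plane-parallel air mass 1/cos(z): a simplification, poor near the horizon.
    m = 1.0 / math.cos(math.radians(zenith_deg))
    return extraterrestrial * beam_transmittance(m, optical_depths)


# Hypothetical broadband optical depths for a clean day at some site.
clean = {"rayleigh": 0.09, "aerosol": 0.15, "water_vapor": 0.05, "ozone": 0.02}
# Same atmosphere with a smoke plume: only the aerosol term changes.
smoky = dict(clean, aerosol=0.8)

for label, taus in (("clean", clean), ("smoky", smoky)):
    print(label, round(dni_from_constituents(1361.0, 30.0, taus)), "W/m²")
```

Keeping each constituent as a separate term is what lets a physics-based model attribute a drop in irradiance to a specific cause rather than folding everything into one empirical factor.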

This enables accurate solar irradiance estimates even in highly complex regions, such as West Africa, Indonesia, Northern India, or the Amazon Basin – areas where empirical models have historically failed due to high short-term weather variability and critical weather events. It is important to note that the experience gained through years of working on solar projects worldwide has greatly contributed to the development of more reliable solar data.
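
One simple way to put a number on short-term variability is to normalise measured irradiance by its clear-sky value (the clear-sky index kc = GHI / GHI_clear) and measure how much kc jumps from one time step to the next. The sketch below is a hypothetical illustration of that idea, not a metric taken from the article: a stable sky scores near zero, while fast-moving broken cloud scores high. The 50 W/m² cut-off for low-sun time steps is an assumption.

```python
from statistics import pstdev


def clear_sky_index(ghi, ghi_clear, min_clear=50.0):
    """kc = GHI / GHI_clear per time step; None where clear-sky irradiance is too low."""
    return [g / c if c >= min_clear else None for g, c in zip(ghi, ghi_clear)]


def step_variability(kc):
    """Population standard deviation of step-to-step changes in kc (gaps skipped)."""
    deltas = [b - a for a, b in zip(kc, kc[1:]) if a is not None and b is not None]
    if not deltas:
        raise ValueError("need at least two consecutive valid time steps")
    return pstdev(deltas)


clear = [800.0] * 5                            # hypothetical clear-sky GHI, W/m²
steady = [790.0] * 5                           # uniformly clear day
broken = [800.0, 320.0, 800.0, 320.0, 800.0]   # passing clouds

print(step_variability(clear_sky_index(steady, clear)))  # 0.0
print(step_variability(clear_sky_index(broken, clear)))  # about 0.6
```

A model tuned on steady skies can match monthly totals while missing this step-to-step behaviour entirely, which is where the regions named above tend to expose empirical approaches.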

Crucially, modern solar radiation models are highly sensitive. They can detect local anomalies such as wildfire smoke or industrial haze, but that sensitivity also demands care: the more advanced the model, the more it relies on high-quality input data and expert calibration. Think of them as high-performance engines: they deliver more, but only if properly controlled and maintained.

What should solar developers and investors demand?

To design bankable, high-performing PV projects, stakeholders must be more selective when choosing solar data providers. This means:

- Rejecting empirical ‘patchwork’ datasets: Mixing data from different sources introduces errors that cannot be reconciled.

- Relying on validated and traceable models: Every assumption must be defensible, every dataset auditable, and every calculation reproducible. Subjective tweaks and data manipulation pave the way for underperformance or even damage to PV assets.

- Using global models with local accuracy: A well-designed physics-based model, validated across all geographies, works just as well in Texas as it does in New York, South Africa or India. Nature doesn’t change across borders.
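
‘Auditable’ and ‘reproducible’ can be made concrete with very little machinery: fingerprint the exact input file and model settings behind a calculation, so anyone can later confirm that a result came from the same data and configuration. The sketch below uses SHA-256 hashes from Python's standard library; the record layout and the configuration keys are hypothetical, not an industry format.

```python
import hashlib
import json


def provenance_record(dataset_bytes, model_config):
    """Fingerprint the exact inputs and settings behind a yield calculation."""
    # Canonical JSON (sorted keys, fixed separators) so key order cannot change the hash.
    config_json = json.dumps(model_config, sort_keys=True, separators=(",", ":"))
    return {
        "dataset_sha256": hashlib.sha256(dataset_bytes).hexdigest(),
        "config_sha256": hashlib.sha256(config_json.encode("utf-8")).hexdigest(),
        "config": model_config,
    }


data = b"timestamp,ghi,dni,dhi\n2024-06-01T12:00,812,705,190\n"  # toy data
original = provenance_record(data, {"model_version": "1.0", "site_adaptation": "linear"})
rerun = provenance_record(data, {"site_adaptation": "linear", "model_version": "1.0"})
tweaked = provenance_record(data, {"model_version": "1.0", "site_adaptation": "manual"})

print(original["config_sha256"] == rerun["config_sha256"])    # True
print(original["config_sha256"] == tweaked["config_sha256"])  # False
```

Storing such a record next to every yield report means an undocumented subjective tweak shows up as a changed hash instead of disappearing silently.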

In a market that increasingly values accuracy, those who invest in scientifically sound solar radiation data gain a competitive edge. They deliver PV systems that perform not only in theory but also in practice. They avoid surprises from subjectively manipulated and unvalidated data. Most importantly, they build trust.

The PV industry needs to continually build its position with investors, lenders, regulators and grid operators who want to rely on PV as a solid partner on the path to the energy transition.

The solar data industry doesn’t need more assumptions. It needs science.