The need to replace fossil fuels with renewables, and to replace high electricity consumption and wastage with more energy-efficient systems, means new kinds of grids will be needed.

Or rather, existing grids will have to adapt. Handling and co-ordinating distributed energy resources such as solar, wind, EVs, demand response and energy storage is going to be a tough balancing act. That work spans every level, from high-voltage transmission down to PV plant monitoring and the smart meters that are increasingly being rolled out.

Solar advocates and industry groups rightfully point to reluctance from some utilities to embrace renewables, which they see as an existential threat to their business models and revenues. However, it is also undeniably true that integrating variable renewable energy, coping with new technologies like rapid chargers for EVs and keeping the distributed network of the future stable present real challenges.

“It seems that every day we read new stories of declining electricity demand due to the rapid expansion of both energy efficiency and distributed generation. Over the past decade, consumers have increasingly adopted distributed energy resources (DERs), which has had significant impacts on the bulk power grid and distribution operations,” says Omar Saadeh, grid and distributed resources analyst at GTM Research.

Especially in the US, increased adoption of DERs at household level has run alongside mandated programmes to stimulate larger-scale distributed resources like utility-scale PV.

“Amidst all this substantial ongoing change, utilities are mandated with maintaining the delivery of reliable electricity – and they’re rightfully concerned. The variability of distributed and renewable generation produces significant operational challenges, such as two-way power flows, balancing discrepancies and so on – creating a higher demand for rapidly deployable grid flexibility,” Saadeh says.

The key to a smarter grid will therefore be how well it can respond. That means how well its operators handle information and react to it, and also how well the constituent branches of the distributed network do the same.

Big Data is a relatively loose buzzword. It can mean more than a terabyte (1,000GB) of data, or data too rich and vast to be processed by conventional techniques. For the grid and distributed energy resources, the term fits the masses of inputs and variables that need to be taken into account.

For instance, Ragu Belur, one of the co-founders of microinverter and energy management specialist Enphase, tells PV Tech Power that his company collects around 850GB of data daily from a quarter of a million systems in 80 different countries.
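To put Belur's figures in perspective, the arithmetic below reads "a quarter of a million" as 250,000 systems and uses decimal units (1GB = 1,000MB, 1TB = 1,000GB). Both are assumptions for illustration. On those assumptions, each system sends only about 3.4MB a day. The daily volume stays below the terabyte threshold mentioned above, but the fleet passes it within two days and builds up roughly 310TB a year.

```python
# Figures quoted by Enphase; 250,000 is our reading of "a quarter of a million".
DAILY_GB = 850
SYSTEMS = 250_000

# Decimal units assumed: 1 GB = 1,000 MB, 1 TB = 1,000 GB.
per_system_mb_per_day = DAILY_GB * 1_000 / SYSTEMS
annual_tb = DAILY_GB * 365 / 1_000

print(f"{per_system_mb_per_day:.1f} MB per system per day")
print(f"{annual_tb:.0f} TB per year")
```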

Components of a distributed network, while not necessarily tied to each other with power cables, will be tied together by IT. To drop in another currently popular phrase, a network that can react predictively and in real-time to technical considerations and electricity consumption and production patterns will equate to – as one company interviewed called it – an “energy Internet of Things”.

Managing DERs

To manage and control the ebb and flow of electrons in a network that includes a high proportion of variable renewable power is clearly going to require some serious processing power and intelligent design.

Data, Big Data included, can be collected and used at any number of points on a network. However, as Saadeh points out, it will ultimately be the grid operator's responsibility to manage the overall picture. This will be done through a so-called distributed energy resource management system (DERMS).

“A DERMS is a software-based solution that increases an operator’s real-time visibility into its underlying distributed asset capabilities. Through such a system, distribution utilities will have the increased level of control and flexibility to more effectively manage the technical and economic challenges posed by an increasingly distributed grid,” Saadeh says.
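Saadeh describes a DERMS as giving operators real-time visibility into what their distributed assets can do. The sketch below illustrates one small piece of that idea: it adds up, feeder by feeder, how much extra export the online assets could still provide. The `DerAsset` record, its fields and the `feeder_headroom` function are all hypothetical. Real DERMS products model far more, such as network constraints, forecasts and control latency.

```python
from dataclasses import dataclass

@dataclass
class DerAsset:
    """Hypothetical snapshot of one distributed asset, as a DERMS might hold it."""
    asset_id: str
    feeder: str
    kind: str             # e.g. "pv", "battery", "demand_response"
    max_export_kw: float  # most the asset could inject right now
    current_kw: float     # what it injects now (negative means it is consuming)
    online: bool = True

def feeder_headroom(assets: list[DerAsset]) -> dict[str, float]:
    """Per feeder, sum the extra export (kW) that online assets could still provide."""
    headroom: dict[str, float] = {}
    for asset in assets:
        if not asset.online:
            continue  # an unreachable asset offers no controllable capacity
        spare = max(0.0, asset.max_export_kw - asset.current_kw)
        headroom[asset.feeder] = headroom.get(asset.feeder, 0.0) + spare
    return headroom

fleet = [
    DerAsset("pv-1", "F1", "pv", max_export_kw=5.0, current_kw=3.5),
    DerAsset("bat-1", "F1", "battery", max_export_kw=10.0, current_kw=-2.0),
    DerAsset("dr-1", "F2", "demand_response", max_export_kw=20.0, current_kw=0.0, online=False),
]
print(feeder_headroom(fleet))  # {'F1': 13.5}
```

A battery that is charging counts its full swing as headroom, because it could stop charging and start exporting. Feeder F2 does not appear at all, because its only asset is offline.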

“While global adoption trends may differ, as grid operators are faced with larger, more widespread DER adoption, distribution grid challenges must be addressed with regional solutions; of which we believe DERMS will become a vital part of most utilities’ system portfolios.”

Luckily, while the challenges posed are great, the necessity for greater connectivity between the network’s many agents fits with the wider trend of an increase in cloud-based computing and an increasingly shared obsession with getting the most out of data and analytics.

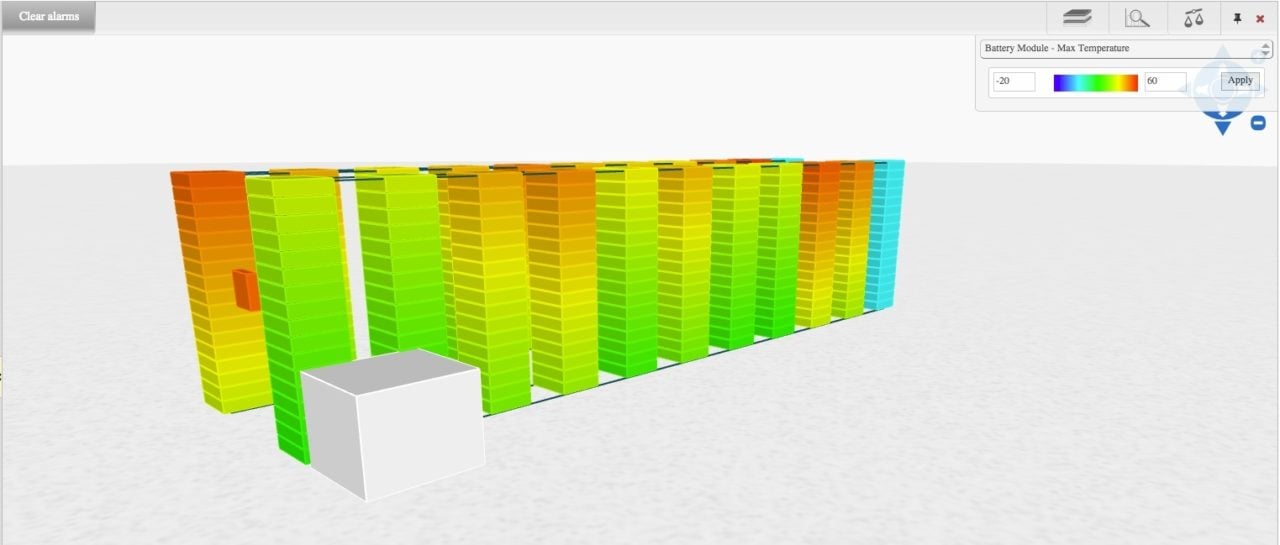

At the individual system level, storage is just a battery and PV is just a generator. Once the two are combined and connected to the grid, however, the way the energy management system's control software uses data becomes absolutely key. It determines not only performance but also how the system interacts with the grid and other distributed resources.

Uses of that data naturally include predicting likely solar irradiance patterns, and therefore the expected output of a PV array in watt-hours. They also include analysing likely patterns of consumption from historical data. More subtly, data will allow a PV-plus-storage system to respond to pricing signals, buying from the grid or other interconnected PV systems at the cheapest prices and selling at the highest.
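The article describes buying at the cheapest prices and selling at the highest, but not how a system would choose when to do so. One simple way is the classic single-transaction "buy low, sell high" scan: charge in the cheapest hour, then discharge in a later, dearer hour. The sketch below assumes an hourly price forecast, one full cycle per day and a round-trip efficiency of 90%. It ignores PV output forecasts and battery power limits. The function name and the prices are hypothetical.

```python
def best_single_cycle(prices: list[float], efficiency: float = 0.9) -> tuple[int, int, float]:
    """Pick a charge hour and a later discharge hour that maximise profit per kWh charged.

    prices: forecast price per kWh for each hour (hypothetical input).
    efficiency: assumed round-trip efficiency; only this share of charged energy is sold.
    Returns (buy_hour, sell_hour, profit_per_kwh), or (-1, -1, 0.0) if no cycle pays.
    """
    best = (-1, -1, 0.0)
    cheapest_hour = 0
    for hour in range(1, len(prices)):
        # Charging must come before discharging, so track the cheapest *earlier* hour.
        if prices[hour - 1] < prices[cheapest_hour]:
            cheapest_hour = hour - 1
        profit = prices[hour] * efficiency - prices[cheapest_hour]
        if profit > best[2]:
            best = (cheapest_hour, hour, profit)
    return best

prices = [0.10, 0.08, 0.12, 0.30, 0.25]  # hypothetical $/kWh forecast
print(best_single_cycle(prices))  # charge in hour 1, discharge in hour 3
```

The efficiency term is there to stop the battery from cycling for spreads too small to cover its conversion losses.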

According to sustainability group Rocky Mountain Institute, there are also 13 different grid services battery storage could potentially provide, some simultaneously. The ability to intelligently manage which of those services the system is providing at any time will also require fast and complex calculations.
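Deciding which grid services to provide at the same time is an optimisation problem. One simplified version treats it as a 0/1 knapsack: each service ties up part of the inverter's power rating and earns some value, and the aim is the most valuable combination that fits. The sketch below solves that version by dynamic programming. The service names, power commitments and values are hypothetical and are not the Rocky Mountain Institute's list. A real controller would also need to track state of charge, timing and market rules.

```python
def pick_services(services: dict[str, tuple[int, float]], capacity_kw: int) -> tuple[float, list[str]]:
    """Choose the set of services whose power commitments fit within capacity_kw and
    whose combined value is highest (0/1 knapsack via dynamic programming).

    services: name -> (power commitment in whole kW, value for the period); hypothetical.
    """
    # best[c] holds (value, chosen service names) achievable with at most c kW committed.
    best: list[tuple[float, list[str]]] = [(0.0, [])] * (capacity_kw + 1)
    for name, (power, value) in services.items():
        # Walk capacity downwards so each service is counted at most once.
        for c in range(capacity_kw, power - 1, -1):
            candidate = best[c - power][0] + value
            if candidate > best[c][0]:
                best[c] = (candidate, best[c - power][1] + [name])
    return best[capacity_kw]

services = {
    "frequency_regulation": (60, 50.0),
    "peak_shaving": (50, 40.0),
    "voltage_support": (40, 30.0),
    "arbitrage": (30, 20.0),
}
print(pick_services(services, capacity_kw=100))
# (80.0, ['frequency_regulation', 'voltage_support'])
```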

John Jung from Greensmith Energy says that he advises customers to think of a smart distributed energy network as analogous to a network of distributed computers. Greensmith delivers complete storage systems with an emphasis on software and data analytics. The company claims to have been involved in as much as one-third of all energy storage deployed in the US to date, with an approach Jung describes as software-defined and battery-agnostic.

With a multitude of applications available, ranging from solar time-shifting and peak demand management to grid-balancing ancillary services like voltage regulation, different battery storage systems will require different battery types, system designs and configurations. The richness of data, and of the software that processes it, will determine how well networks can cope with distributed resources, Jung says.

Clearly there is a wider trend that industries in general, especially in high tech, are using more data and analytics to assess performance and to control equipment. More specific to storage is the fact that batteries tend to be deployed on the weakest parts of the grid, especially where penetration of renewables is highest and causes greatest intermittency.

John Jung says that this requires “a lot of information on what’s happening in those locales, in order to be more precise about the way not only you programme and deploy energy storage from an applications standpoint but how you do that in an efficient way so you achieve ROI”. This could include making sure a system and its batteries are not “oversized” in the process of design, an expensive oversight.
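As one illustration of how local load data guards against oversizing, the sketch below sizes a battery for peak shaving. The idea is to size only for the part of the load curve above a target, rather than for the whole peak. It assumes hourly readings, a single peak event per profile and a battery that starts the event fully charged. The function name and the load figures are hypothetical. A real design would add margins for depth of discharge, conversion losses and degradation.

```python
def peak_shaving_size(load_kw: list[float], target_kw: float,
                      interval_h: float = 1.0) -> tuple[float, float]:
    """Return (power_kw, energy_kwh) a battery needs to hold load at or below target_kw.

    Assumes one peak event and a full battery at its start, so the energy needed is
    the area of the load curve above the target.
    """
    excess = [max(0.0, load - target_kw) for load in load_kw]
    power_kw = max(excess, default=0.0)    # largest instantaneous shortfall
    energy_kwh = sum(excess) * interval_h  # total energy above the target line
    return power_kw, energy_kwh

site_load = [300, 320, 450, 520, 480, 350]  # hypothetical hourly kW readings
print(peak_shaving_size(site_load, target_kw=400))  # (120, 250.0)
```

In this example, holding the peak to 400kW needs only 120kW and 250kWh of storage. A battery sized to cover the full 520kW peak would be far larger.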

According to Jung, storage is also in a “unique” position to be at the heart of what he and others have dubbed “Grid 2.0”. The majority of Greensmith’s customers have been US utilities, dealing not just with integrating renewables but also transmission infrastructure deferrals and displacing the use of gas plants to regulate grid frequency.
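Batteries can help regulate grid frequency in several ways. An operator may send them a regulation signal, or they may react locally to the frequency they measure. The sketch below shows the second approach as proportional (droop) control. Below nominal frequency the battery discharges, and above it the battery charges, in proportion to the deviation. The 60Hz nominal, 5% droop and 0.036Hz deadband are assumptions for illustration, not Greensmith's settings.

```python
def droop_response_kw(freq_hz: float, rated_kw: float, nominal_hz: float = 60.0,
                      droop: float = 0.05, deadband_hz: float = 0.036) -> float:
    """Proportional (droop) frequency response for a battery inverter.

    Positive result = discharge to the grid (frequency low); negative = charge (frequency high).
    droop=0.05 means a 5% frequency deviation would command full rated power.
    """
    deviation = freq_hz - nominal_hz
    if abs(deviation) <= deadband_hz:
        return 0.0  # inside the deadband: no response
    # Measure from the deadband edge so the output rises smoothly from zero.
    effective = deviation - deadband_hz if deviation > 0 else deviation + deadband_hz
    power = -(effective / nominal_hz) / droop * rated_kw
    return max(-rated_kw, min(rated_kw, power))  # never exceed the inverter rating

print(round(droop_response_kw(59.9, rated_kw=1000.0), 1))  # small discharge, about 21.3 kW
```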

A smarter grid means different things to different people, but Jung believes it is about making the best use of existing assets as well as newer ones, to which information – data – is key. Storage, Jung says, "has a unique opportunity just because [of] the versatility and programmability and 'multi-applicational' aspect…to be part of Grid 2.0".

What can it do for solar and for the consumer?

Although Jung believes storage has a wholly unique opportunity, some in solar might disagree. In the past, the solar industry was primarily concerned with output, efficiency and performance at system level. Now there is a growing tendency to consider the impact of solar on the overall network, especially in regions where grid constraints cause interconnection queues and delays.

The central role of data in a complete energy system of the type offered by Enphase and others – solar, storage, inverter (or micro-inverters) and a software-defined control and management system – means the solar industry could be well-positioned too.

“Data as it relates to renewables – it is one tool to help in the integration of DG with the grid itself. The grid was not designed for this. Now for the first time, not only do we have generation occurring at the end nodes, in the distribution and transmission lines, but generation is now heading back into the grid,” Paul Nahi, Enphase’s CEO, says.

“The ability for renewables to actually add to grid stability is what I think is being missed by most individuals.”

Nahi says the usefulness of data collected by micro-inverters and other parts of a solar energy system at all scales could help smash a common perception that adding diverse distributed generation resources means losing visibility of what is going on in the network, versus a few centrally located, centrally dispatched assets like coal and nuclear.

“The reality is that now, with those solar assets out there, we can leverage those assets to monitor the grid. Remember, in most cases we know more about what’s happening inside the network, in the distribution and transmission lines than even the utilities know. They don’t have access to this data.”
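As a rough illustration of how inverter telemetry could double as grid monitoring, the sketch below groups AC voltage readings by feeder and flags inverters outside a tolerance band. It assumes a 240V nominal and a ±5% band, roughly in line with the Range A service-voltage limits in ANSI C84.1. The telemetry shape and the identifiers are hypothetical and do not describe Enphase's actual data format.

```python
def flag_voltage_excursions(readings: dict[str, dict[str, float]], nominal_v: float = 240.0,
                            tolerance: float = 0.05) -> dict[str, list[str]]:
    """List, per feeder, the inverters whose measured voltage is outside the band.

    readings: feeder -> {inverter_id: measured AC voltage}; hypothetical telemetry shape.
    tolerance: allowed fractional deviation from nominal (assumed +/-5%).
    """
    low, high = nominal_v * (1 - tolerance), nominal_v * (1 + tolerance)
    flagged: dict[str, list[str]] = {}
    for feeder, by_inverter in readings.items():
        out_of_band = sorted(i for i, v in by_inverter.items() if not low <= v <= high)
        if out_of_band:
            flagged[feeder] = out_of_band  # feeders with no excursions are omitted
    return flagged

readings = {
    "feeder-A": {"inv-1": 241.0, "inv-2": 253.5, "inv-3": 239.2},
    "feeder-B": {"inv-4": 226.5, "inv-5": 240.1},
}
print(flag_voltage_excursions(readings))
# {'feeder-A': ['inv-2'], 'feeder-B': ['inv-4']}
```

Across many rooftops, such readings give a picture of voltage along a feeder that a utility would otherwise only have at a few metered points.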

An example Nahi cited was Enphase’s remote upgrading of some 800,000 micro-inverters on Hawaiian PV systems at the beginning of this year. To make the systems comply with new grid protocols and therefore clear an interconnection backlog for thousands of new customers, Enphase was changing settings on devices in the field from its offices, Nahi says, “almost literally at the push of a button”.
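The sketch below illustrates the general pattern behind a remote settings push. It finds every device in a region that is behind the required settings version, applies the new values and records which devices changed. Because devices already on the new version are skipped, re-running the push is harmless. The `Inverter` record, the setting name and its values are hypothetical. This is not Enphase's actual mechanism, and a real fleet update would also need authentication, acknowledgements and retries.

```python
from dataclasses import dataclass, field

@dataclass
class Inverter:
    """Hypothetical fleet record for one field device."""
    serial: str
    region: str
    profile_version: int
    settings: dict[str, float] = field(default_factory=dict)

def push_profile(fleet: list[Inverter], region: str, version: int,
                 new_settings: dict[str, float]) -> list[str]:
    """Apply a settings profile to every inverter in `region` older than `version`.

    Returns the serials updated. Devices already on `version` are skipped, so a
    repeated push changes nothing (idempotent).
    """
    updated = []
    for inv in fleet:
        if inv.region == region and inv.profile_version < version:
            inv.settings.update(new_settings)
            inv.profile_version = version
            updated.append(inv.serial)
    return updated

inverters = [
    Inverter("A1", "HI", 1, {"example_trip_delay_s": 1.0}),  # hypothetical setting
    Inverter("A2", "HI", 2),
    Inverter("B1", "CA", 1),
]
print(push_profile(inverters, "HI", 2, {"example_trip_delay_s": 2.0}))  # ['A1']
print(push_profile(inverters, "HI", 2, {"example_trip_delay_s": 2.0}))  # []
```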

Software and scalability

Nahi of Enphase and Jung of Greensmith would likely agree that the scalability of software-based solutions to network problems is one of their strongest suits. Jung claims Greensmith's software teams "pump out new algorithms" every month. Around half of the company's engineers come from industries such as telecoms and cloud computing. They are versed in issues like cyber-security, which are growing in importance and are more commonly associated with IT than energy.

The ability to scale is critical in an early-stage industry such as storage, where designing and building a new system from scratch could be prohibitively expensive. Jung claims that projects could save money by using software to customise them, feeding different variables and metrics from a vast available range into a scalable platform.

The scaling effect works both ways too. Mark Chew, who specialises in analytics for the Internet of Things at Big Data analysis and response company Autogrid, says that the recent success of large-scale solar has driven down costs and improved the feasibility of distributed generation as a whole.

Autogrid is headquartered in the US but also active in Asia and Europe. It analyses big data in energy networks and provides tailored solutions for customers that include big utilities. It recently worked with its partner Eneco, an energy producer and supplier in Holland, on what the two companies described as "the world's first software-defined power plant".

This new type of "virtual power plant" (VPP) integrates multiple distributed resources, adding up to 100MW of dispatchable resources, including CHP and industrial demand response, to Eneco's portfolio. It has been built on Autogrid's Predictive Controls platform and will help integrate renewables. Part of the reason is that it can respond to pricing signals from the Dutch transmission system operator's wholesale markets, which will help pay balancing costs.
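How Autogrid's Predictive Controls platform dispatches resources is not described here. The sketch below shows one of the simplest ways a VPP could treat distributed resources "as if they were conventional generation": merit-order dispatch. Resources are called in order of increasing cost until a target is met, and any resource dearer than the current market price is skipped. The resource names, capacities and costs are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Resource:
    name: str
    available_mw: float
    cost_per_mwh: float  # hypothetical marginal cost (or bid) of dispatching it

def merit_order_dispatch(resources: list[Resource], target_mw: float,
                         price_per_mwh: float) -> dict[str, float]:
    """Dispatch the cheapest resources first until target_mw is met, skipping any
    whose cost exceeds the market price signal. Returns MW assigned per resource."""
    schedule: dict[str, float] = {}
    remaining = target_mw
    for res in sorted(resources, key=lambda item: item.cost_per_mwh):
        if remaining <= 0 or res.cost_per_mwh > price_per_mwh:
            break  # target met, or everything left costs more than the price pays
        mw = min(res.available_mw, remaining)
        schedule[res.name] = mw
        remaining -= mw
    return schedule

portfolio = [
    Resource("chp", 40.0, 55.0),
    Resource("industrial_dr", 30.0, 80.0),
    Resource("battery", 20.0, 30.0),
    Resource("backup_dr", 25.0, 120.0),
]
print(merit_order_dispatch(portfolio, target_mw=70.0, price_per_mwh=100.0))
# {'battery': 20.0, 'chp': 40.0, 'industrial_dr': 10.0}
```

When the price falls, fewer resources clear: at 60 per MWh only the battery and the CHP would be dispatched. This is how a pricing signal can steer the whole portfolio.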

“For years utilities have done forecasting, optimisation to different degrees and different types of control,” Chew says.

“What’s changed about what they need is that where they used to be dispatching a relatively small number of resources to a large load, now utilities need to do all of that forecasting, optimisation and control, in real-time and at scale, so the number of calculations has increased by orders of magnitude.”
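Chew's point about scale can be made concrete with a deliberately simple forecaster. Simple exponential smoothing is used below only as a stand-in, since the article does not say which forecasting methods Autogrid uses. It is applied independently to every resource, and the arithmetic at the end compares a central fleet with a distributed one. The fleet sizes and intervals are hypothetical: 50 plants scheduled hourly versus 500,000 DERs forecast every five minutes.

```python
def exp_smoothing_forecast(history: list[float], alpha: float = 0.3) -> float:
    """One-step-ahead forecast by simple exponential smoothing."""
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for observation in history[1:]:
        # Blend each new observation into the running level.
        level = alpha * observation + (1 - alpha) * level
    return level

def forecast_fleet(histories: dict[str, list[float]], alpha: float = 0.3) -> dict[str, float]:
    """Forecast every resource independently; the work grows linearly with fleet size."""
    return {rid: exp_smoothing_forecast(h, alpha) for rid, h in histories.items()}

# Forecasts needed per day under the hypothetical fleet sizes above.
central = 50 * 24            # 1,200
distributed = 500_000 * 288  # 144,000,000
print(distributed // central)  # 120000, about five orders of magnitude more
```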

Aggregating and managing DG from control centres works out very economically, according to Chew.

“The cost to put in a VPP, software that can dispatch and control distributed resources as if they were conventional generation, is a fraction of the cost of building a new power plant, or putting in peaker plants. Plus the utility doesn’t have to buy new hardware, they can use scalable cloud-based solutions, there are no emissions [and] they have it distributed across their grid so it’s not limited to one place.”

Grid 2.0 and the new value chain

The role of data in the creation of tomorrow's energy networks will be a central one. One project that started in June in Cologne, Germany, will use Internet of Things analytics to support what AGT called "the building and running of low energy neighbourhoods". AGT is an IoT specialist and one of the participants in the five-year project. Using IoT data and analytics to inform civic planners, as well as grid and energy asset operators, about their networks could lead to major gains. These could include cutting primary energy consumption by as much as 60%, reducing emissions by a similar amount and raising the city's share of energy sourced from renewables to 60% by 2020. Two other European cities, Barcelona and Stockholm, have also been chosen for the EU project.

Solar, storage, smart meters, thermostats, demand response and your car: anything you can think of behind and in front of the meter will be defined not just by how well it performs but also by how well it informs and interacts with the rest of the network. Big Data is going to be huge.

This is an abridged version of an article that appeared in the December 2015 issue of PV Tech Power.